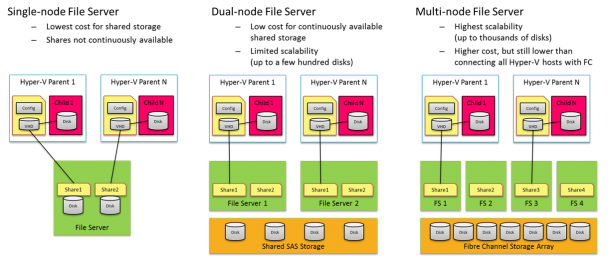

Windows Server 2012 introduces a new capability: using an SMB file server for virtualization and private cloud. There are three main deployment modes:

- Single-node File Server - A standalone file server is not highly available, but it is the least expensive file server solution.

- Dual-node File Server - This is probably the most common file server configuration, providing continuous availability (via SMB transparent failover) at a low cost. Using shared Serial Attached SCSI (SAS) storage (just-a-bunch-of-disks [JBOD] enclosures or a SAS-based storage array), this solution can scale to a few hundred disks.

- Multi-node File Server - This file server cluster is highly scalable. It can leverage features like SMB Scale-Out and SMB Direct to create a shared storage infrastructure that serves dozens or even hundreds of Hyper-V nodes. Using 10GbE or InfiniBand to connect the Hyper-V compute nodes to the file servers has significant cost-saving potential compared to Fibre Channel.

The diagram below illustrates these three modes.

To understand in detail how the SMB file server really works, see Jeffrey Snover’s blog post.

One of the questions I get a lot is: what about performance? One of the key capabilities that enables great performance is SMB Direct. SMB Direct (SMB over RDMA) is a new storage protocol in Windows Server 2012. It enables direct memory-to-memory data transfers between server and storage, with minimal CPU utilization, while using standard RDMA-capable NICs. SMB Direct is supported on all three available RDMA technologies (iWARP, InfiniBand and RoCE). Minimizing the CPU overhead for storage I/O means that servers can handle larger compute workloads.

Microsoft also published preliminary performance results (check out Jose Barreto’s blog for detailed information).

Windows Server 2012 Beta results – SMB Direct IOPs, bandwidth and latency

These results come from a couple of servers that play the roles of SMB server and SMB client. The client in this case was a typical server-class computer using an Intel Westmere motherboard with two Intel Xeon L5630 processors (2 sockets, 4 cores each, 2.10 GHz). For networking, it was equipped with a single RDMA-capable Mellanox ConnectX-2 QDR InfiniBand card sitting in a PCIe Gen2 x8 slot. For these tests, the IOs went all the way to persistent storage (using 14 SSDs). We tested three IO sizes: 512 KB, 8 KB and 1 KB, all reads.

| Workload | IO Size | IOPS | Bandwidth | Latency |
| --- | --- | --- | --- | --- |
| Large IOs, high throughput (SQL Server DW) | 512 KB | 4,210 | 2.21 GB/s | 4.41 ms |
| Small IOs, typical application server (SQL Server OLTP) | 8 KB | 214,000 | 1.75 GB/s | 870 µs |
| Very small IOs (high IOPs) | 1 KB | 294,000 | 0.30 GB/s | 305 µs |
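As a quick sanity check on the table above, the bandwidth column should roughly equal IO size times IOPS. The sketch below redoes that arithmetic. It assumes that "KB" means 1024 bytes and that "GB/s" is decimal (10^9 bytes per second); neither is stated in the source. The function names are made up for illustration. The "IOs in flight" figure is an estimate derived from Little's law, assuming steady state, not a number reported in the published results.

```python
# Rows from the table above: (workload, IO size in KB, IOPS, latency in seconds).
ROWS = [
    ("Large IOs (SQL Server DW)", 512, 4_210, 4.41e-3),
    ("Small IOs (SQL Server OLTP)", 8, 214_000, 870e-6),
    ("Very small IOs", 1, 294_000, 305e-6),
]

KIB = 1024          # assumption: "KB" in the table means 1024 bytes
GB = 1_000_000_000  # assumption: "GB/s" in the table is decimal gigabytes


def bandwidth_gb_per_s(io_size_kb, iops):
    """Bandwidth implied by a given IO size and IO rate."""
    return io_size_kb * KIB * iops / GB


def outstanding_ios(iops, latency_s):
    """Little's law: average IOs in flight = IO rate x average latency."""
    return iops * latency_s


for name, size_kb, iops, latency in ROWS:
    print(
        f"{name}: {bandwidth_gb_per_s(size_kb, iops):.2f} GB/s, "
        f"about {outstanding_ios(iops, latency):.0f} IOs in flight"
    )
```

Under these assumptions, the computed bandwidths round to the values in the table (2.21, 1.75 and 0.30 GB/s), which suggests the three columns are internally consistent.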

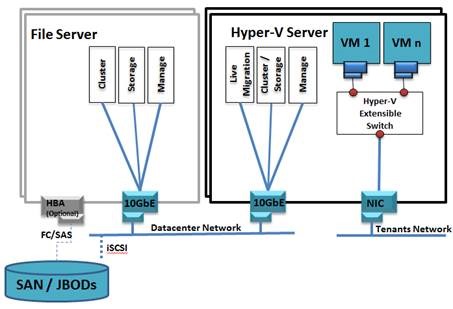

Check out Jose Barreto’s blog for detailed information.

Last but not least, Windows Server 2012 improves two different approaches to scaling storage in the datacenter.

Scaling Storage and Compute Independently

Windows Server 2012 based continuously available file servers deliver very significant benefits in manageability, performance and scale, while satisfying mission-critical workload needs for availability. All major datacenter workloads can now be deployed to access data through easily manageable file servers. File servers and compute resources can be scaled separately. With this approach, your cloud infrastructure would logically look like the following diagram:

You can find the configuration document on TechNet describing how to implement this topology.

Scaling Storage and Compute Together

If you prefer the “scale together” camp and want to take advantage of new Windows Server 2012 capabilities to build that more efficiently, you can now integrate compute and storage into standard scale units, each comprising 2-4 nodes connected to shared SAS storage. You would use Storage Spaces or PCI RAID controllers on the Hyper-V servers themselves to allow the Hyper-V nodes to talk directly to low-cost SAS disks without going through a dedicated file server, and have the data accessible across your scale units via CSV v2.0, similar to the file server case. With this approach, your cloud infrastructure would logically look like the following diagram:

You can find the configuration guide on TechNet describing how to implement this topology.